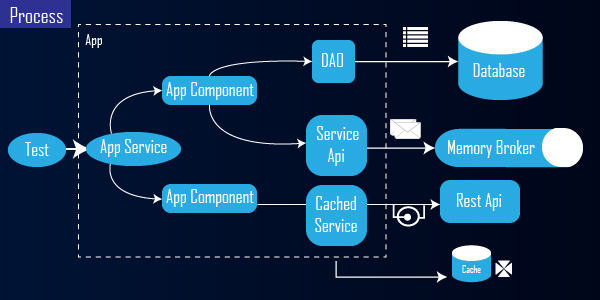

White box testing evaluates the resilience of an application's internal and external systems by examining its source code thoroughly. Yes, the source code is handed to the ethical hacker who performs the testing.

No, having the source code does not make it easier than black box or grey box penetration testing. It is a complex and time-consuming process. To see why, let's first look at how white box penetration testing works.

How White Box Penetration Testing Works

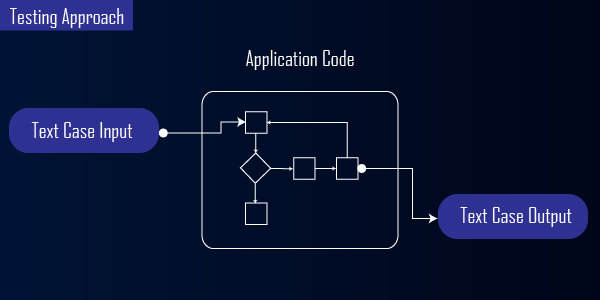

This involves three steps.

First, the ethical hacker or security analyst reads and understands the source code, including every line, branch, segment, and function. This requires a high level of skill and great precision.

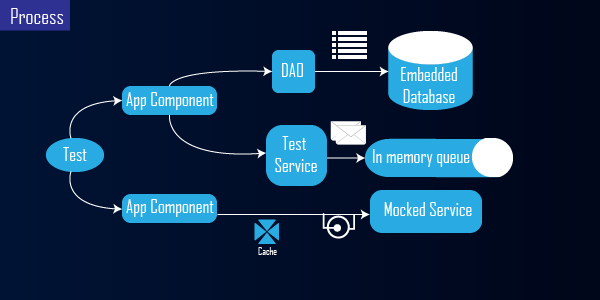

Second, they design test case inputs that exercise the internal system to find bugs, vulnerabilities, and internal errors. Each test case is derived from the functional specifications of the source code, the security specifications, and any specific requirements.

Finally, after running the test cases and collecting their output, they prepare a detailed report covering the testing activity, the outcome, each vulnerability found, and the source of each vulnerability.
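To make the three steps concrete, here is a minimal Python sketch. The function `resolve_upload_path`, the directory `/srv/uploads`, and the test inputs are all hypothetical. Reading the source (step 1) shows that the function validates paths with a plain string-prefix check, so one test case (step 2) is crafted to exploit exactly that, and any mismatch between expected and actual behaviour is collected as a finding for the report (step 3).

```python
import posixpath

BASE_DIR = "/srv/uploads"  # hypothetical upload directory


def resolve_upload_path(base_dir, filename):
    """Hypothetical code under review: maps a user-supplied file name into base_dir."""
    path = posixpath.normpath(posixpath.join(base_dir, filename))
    # Flaw visible only by reading the source: a string-prefix check also
    # accepts sibling directories such as /srv/uploads_evil.
    if not path.startswith(base_dir):
        raise ValueError("path escapes upload directory")
    return path


# Step 2: test case inputs derived from reading the code.
# Each entry: (input, should_be_allowed, description)
TEST_CASES = [
    ("report.pdf", True, "ordinary file name"),
    ("../../etc/passwd", False, "parent-directory traversal"),
    ("/etc/passwd", False, "absolute path"),
    ("../uploads_evil/x", False, "sibling directory sharing the base prefix"),
]


def run_tests():
    """Run every test case and return findings for the report (step 3)."""
    findings = []
    for filename, should_be_allowed, description in TEST_CASES:
        try:
            resolve_upload_path(BASE_DIR, filename)
            allowed = True
        except ValueError:
            allowed = False
        if allowed != should_be_allowed:
            findings.append({
                "input": filename,
                "description": description,
                "location": "resolve_upload_path",
            })
    return findings


for finding in run_tests():
    print(finding)
```

Only the sibling-directory input produces a finding. One possible fix is to compare `posixpath.commonpath([base_dir, path])` with `base_dir` instead of using a string prefix.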

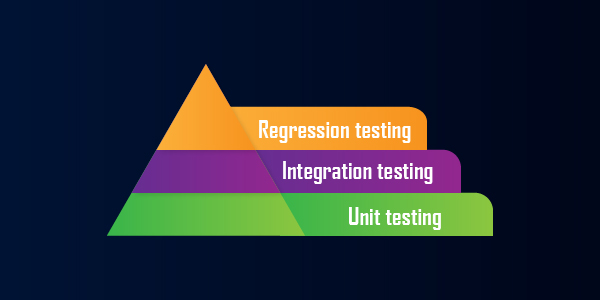

Three levels of White Box Testing

An ethical hacker conducts white box testing at three levels, also known as the three methods of white box testing:

- Unit testing

- Integration testing

- Regression testing

Unit testing

Software functionality is built from individual components called units. Each unit is tested on its own to make sure it performs as designed. Flaws found at this level are easy and cheap to fix. This level of testing is mostly done during the development stage of an application, and the code can be regarded as complete only after it passes these tests.

Unit testing is the first level of testing, performed before integration testing and the remaining levels of the testing hierarchy. Because each module is tested on its own, results come back quickly without waiting for the rest of the system. Unit testing is supported by unit testing frameworks, stubs, drivers, and mock objects.
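A minimal sketch using Python's built-in `unittest` framework and `unittest.mock`. The unit under test, `send_reset_link`, is hypothetical. Its mail-sending dependency is replaced with a mock object and its token generator with a stub (a lambda returning a fixed value), so the unit is tested in isolation from the rest of the system.

```python
import unittest
from unittest import mock


def send_reset_link(user_email, mailer, token_factory):
    """Hypothetical unit under test: e-mails a password-reset link."""
    if "@" not in user_email:
        raise ValueError("invalid e-mail address")
    token = token_factory()
    mailer.send(user_email, f"https://example.com/reset?token={token}")
    return token


class SendResetLinkTest(unittest.TestCase):
    def test_sends_link_with_generated_token(self):
        mailer = mock.Mock()  # mock object standing in for the real mail service
        # Stub token generator so the result is predictable.
        token = send_reset_link("a@example.com", mailer, lambda: "abc123")
        self.assertEqual(token, "abc123")
        mailer.send.assert_called_once_with(
            "a@example.com", "https://example.com/reset?token=abc123"
        )

    def test_rejects_invalid_address_without_sending(self):
        mailer = mock.Mock()
        with self.assertRaises(ValueError):
            send_reset_link("not-an-address", mailer, lambda: "unused")
        mailer.send.assert_not_called()
```

Run it with `python -m unittest` followed by the module name. Each test checks one behaviour, which keeps the test cases independent of each other.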

Guidelines for getting the best out of unit testing

Following the techniques outlined below helps unit testing deliver the best results while avoiding confusion and unnecessary complexity.

- Test cases must be independent of one another, so that a change or improvement in the requirements does not break unrelated tests.

- Unit test cases should have clear and consistent naming conventions.

- Defects found during unit testing must be fixed before moving on to the next phase of the software development life cycle (SDLC).

- Test only one unit of code at a time.

- Write test cases along with the code; if you postpone them, the number of untested execution paths keeps growing.

- Whenever a module's code changes, check that a corresponding unit test exists for that module and is up to date.

Advantages

- Unit testing helps testers and developers understand the code base, so they can quickly fix defect-causing code.

- Unit tests serve as documentation of how each unit is expected to behave.

- Unit testing identifies and corrects errors early in the development process, which can reduce the number of defects that reach later testing levels.

- It supports code reuse, because a unit can be moved to another project together with its test cases.

Disadvantages

- Because it only operates on code units, it is unable to detect integration or broad-level errors.

- Because unit testing cannot examine every execution path, it cannot catch every error in a program.

- It works best when combined with other testing activities.

Integration testing

Units are combined into groups that together accomplish a specific function. Integration testing makes sure that these groups correctly process, calculate, and manipulate data when working together to produce the expected output.

Why integration testing?

Even when every module of the application has been unit tested, problems can still arise for the following reasons:

- Modules are often written by different developers, whose programming logic may differ. Integration testing is needed to assess how well the modules work together.

- The interaction between the modules and the database must be verified.

- Requirements may be amended or improved while modules are being developed. These new requirements may not be verifiable at the unit level, so integration testing becomes necessary.

- Incompatibility between software modules could lead to errors.

- Compatibility between the hardware and the software must be checked.

- Insufficient exception handling between modules can cause failures.

Integration testing guidelines

- We move on to integration testing only after completing functional testing on each module of the application.

- We always perform integration testing by selecting modules one by one to ensure that the appropriate process is followed and that no integration cases are missed.

- Decide on a test case strategy that lets you create executable test cases from the test data.

- Examine the application's structure and design to find the most important modules to test first, and identify all possible scenarios.

- Create test cases to thoroughly check interfaces.

- Choose the input data for each test case carefully; input data is critical in testing.

- If any faults are discovered, we must report them to the developers, who will subsequently correct the defects and retest the product.
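As an illustration, here is a minimal Python sketch of an integration test. `UserRepository` (a data-access module) and `AccessService` (a business-logic module) are hypothetical. Instead of mocking the database, the test wires both modules to a real in-memory SQLite database from Python's standard `sqlite3` module, so it checks the interfaces between the modules and their interaction with the database.

```python
import sqlite3


class UserRepository:
    """Hypothetical data-access module."""

    def __init__(self, conn):
        self.conn = conn
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS users (name TEXT PRIMARY KEY, role TEXT NOT NULL)"
        )

    def add(self, name, role):
        # Parameterised query: user input never becomes part of the SQL text.
        self.conn.execute("INSERT INTO users (name, role) VALUES (?, ?)", (name, role))

    def role_of(self, name):
        row = self.conn.execute("SELECT role FROM users WHERE name = ?", (name,)).fetchone()
        return row[0] if row else None


class AccessService:
    """Hypothetical business-logic module that depends on UserRepository."""

    def __init__(self, repo):
        self.repo = repo

    def can_delete_records(self, name):
        return self.repo.role_of(name) == "admin"


def test_access_service_with_real_database():
    conn = sqlite3.connect(":memory:")
    repo = UserRepository(conn)
    service = AccessService(repo)
    repo.add("alice", "admin")
    repo.add("bob", "viewer")

    assert service.can_delete_records("alice") is True
    assert service.can_delete_records("bob") is False
    assert service.can_delete_records("mallory") is False  # unknown users are denied

    # A duplicate user must surface as a database error across the module boundary.
    try:
        repo.add("alice", "viewer")
    except sqlite3.IntegrityError:
        pass
    else:
        raise AssertionError("expected IntegrityError for a duplicate user")
    conn.close()


test_access_service_with_real_database()
print("integration test passed")
```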

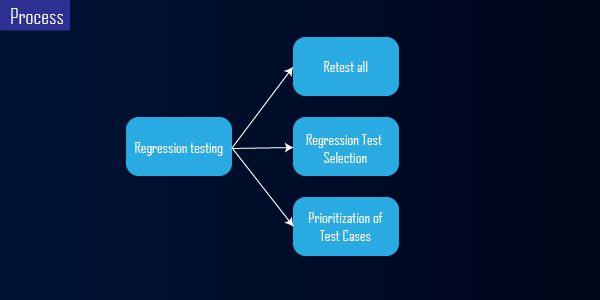

Regression testing

Regression testing is a type of software testing that verifies that an application continues to work as expected after code revisions, updates, or improvements. It ensures the overall stability and functionality of existing features. These code modifications could include adding new features, fixing problems, or updating an existing feature.

When is regression testing needed?

Regression testing is required whenever software maintenance involves upgrades, bug fixes, optimization, or removal of existing functionality, because any of these changes can affect the rest of the system's functionality.
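A small Python sketch of the idea, assuming a hypothetical `parse_port` function whose earlier version accepted out-of-range ports. After the fix, one test pins the bug so it cannot silently return, and another confirms that existing behaviour is unchanged; the whole suite is re-run after every change.

```python
def parse_port(value):
    """Hypothetical function. Suppose an earlier version returned int(value)
    without a range check, so "0" and "70000" were accepted."""
    port = int(value)
    if not 1 <= port <= 65535:
        raise ValueError(f"port out of range: {port}")
    return port


def test_existing_behaviour_unchanged():
    # Existing functionality must keep working after the fix.
    assert parse_port("443") == 443
    assert parse_port("65535") == 65535


def test_regression_out_of_range_ports_rejected():
    # Pins the bug fix so the defect cannot come back unnoticed.
    for bad in ("0", "-1", "65536", "70000"):
        try:
            parse_port(bad)
        except ValueError:
            continue
        raise AssertionError(f"{bad!r} should have been rejected")


REGRESSION_SUITE = [
    test_existing_behaviour_unchanged,
    test_regression_out_of_range_ports_rejected,
]


def run_regression_suite():
    """Re-run every regression test; returns how many ran."""
    for test in REGRESSION_SUITE:
        test()
    return len(REGRESSION_SUITE)


print(run_regression_suite(), "regression tests passed")
```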

The following strategies can be used to perform regression testing:

1. Re-test everything:

In this method, every test case in the existing test suite is re-executed. It is related to, but distinct from, re-testing. Re-testing applies when a test fails and the failure is traced to a software defect: once the defect has been reported and a new version of the software with the fix is delivered, the failed test is run again to confirm that the problem has been resolved. Some people call this confirmation testing.

Re-testing everything is expensive, because it requires a significant amount of time and resources.

2. Selection of regression tests:

Instead of executing the full test suite, this technique executes a selected subset of test cases.

The selected test cases fall into two categories:

- Reusable test cases: these can be used in subsequent regression cycles.

- Obsolete test cases: these are no longer valid and cannot be used in subsequent regression cycles.

3. Test case prioritisation:

Test cases are prioritised based on their business impact, the importance of the functionality they cover, and how frequently that functionality is used. Selecting only the highest-priority test cases reduces the size of the regression test suite.
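Selection and prioritisation can be combined, as in this minimal Python sketch. The `RegressionCase` fields, the 1 to 5 scoring scales, the example test names, and the ordering rule (business impact first, then core functionality, then frequency of use) are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class RegressionCase:
    name: str
    business_impact: int      # hypothetical scale: 1 (low) to 5 (high)
    core_functionality: bool  # does it cover an important feature?
    usage_frequency: int      # hypothetical scale: 1 (rare) to 5 (constant)
    obsolete: bool = False    # no longer valid for the current version


def select_and_prioritise(cases, budget=None):
    """Drop obsolete cases, then order the reusable ones by priority."""
    reusable = [c for c in cases if not c.obsolete]
    ranked = sorted(
        reusable,
        key=lambda c: (c.business_impact, c.core_functionality, c.usage_frequency),
        reverse=True,
    )
    return ranked if budget is None else ranked[:budget]


suite = [
    RegressionCase("login", 5, True, 5),
    RegressionCase("export_csv", 2, False, 2),
    RegressionCase("payment", 5, True, 3),
    RegressionCase("legacy_fax", 4, True, 1, obsolete=True),
    RegressionCase("profile_picture", 1, False, 4),
]

print([c.name for c in select_and_prioritise(suite, budget=2)])
# ['login', 'payment']
```

The `budget` parameter models a limited time window: only the top-ranked reusable cases run.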

The following are some of the benefits of regression testing:

- Regression testing improves the quality of a product.

- It ensures that any bug fixes or modifications do not affect the product’s current functionality.

- Regression testing can be done with the help of automation technologies.

- It ensures that the issues that have been resolved do not recur.

Regression testing has a number of advantages, but it also has certain drawbacks:

- Even small code modifications must be tested, because a minor change can break existing functionality.

- If testing is not automated in the project, it will be a time-consuming and tiresome effort to repeat the test over and over.

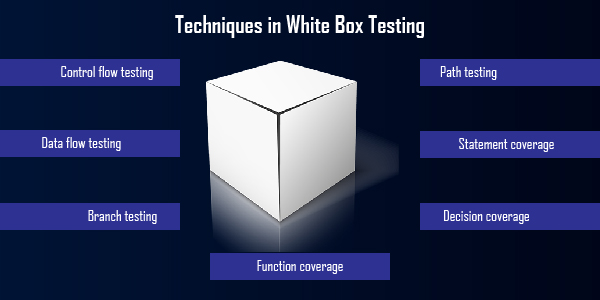

Techniques in White Box Testing

Code coverage is a widely used technique in white box testing. It measures the percentage of the source code that is exercised by the test case inputs. The higher the percentage, the lower the chance that a bug in the source code goes unnoticed. The following techniques are used to achieve and measure code coverage:

- Control flow testing

- Data flow testing

- Branch testing

- Statement coverage

- Decision coverage

- Path testing

- Function coverage
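Tools such as coverage.py measure code coverage automatically. To show the idea, here is a minimal sketch using Python's standard `sys.settrace` and `dis` modules: it records which lines of a hypothetical `classify` function run for a set of test inputs and reports the percentage of statements covered. It only handles a single function and is not a substitute for a real coverage tool.

```python
import dis
import sys


def classify(n):
    if n < 0:
        return "negative"
    if n == 0:
        return "zero"
    return "positive"


def statement_lines(func):
    """Line offsets (relative to the def line) that start a statement in func."""
    code = func.__code__
    return {
        line - code.co_firstlineno
        for _, line in dis.findlinestarts(code)
        if line is not None and line != code.co_firstlineno
    }


def executed_lines(func, *args):
    """Run func(*args) and record which of its lines actually executed."""
    code = func.__code__
    hit = set()

    def tracer(frame, event, arg):
        if frame.f_code is not code:
            return None  # do not trace other functions
        if event == "line":
            hit.add(frame.f_lineno - code.co_firstlineno)
        return tracer

    sys.settrace(tracer)
    try:
        func(*args)
    finally:
        sys.settrace(None)
    return hit


def line_coverage(func, test_inputs):
    """Percentage of func's statements executed by at least one test input."""
    total = statement_lines(func)
    covered = set()
    for args in test_inputs:
        covered |= executed_lines(func, *args)
    return 100 * len(covered & total) / len(total)


print(line_coverage(classify, [(5,)]))               # 60.0
print(line_coverage(classify, [(5,), (-1,)]))        # 80.0
print(line_coverage(classify, [(5,), (-1,), (0,)]))  # 100.0
```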

Control flow testing

Developers frequently use this testing method on their own code because they are the most familiar with its design, code, and implementation. It checks the logic of the code to ensure that the user's needs are met. It is mainly applied to small programs and to individual segments of larger programs.

Process of Control Flow Testing:

The steps involved in the control flow testing procedure are as follows:

Control Flow Graph Creation: A control flow graph is constructed from the given source code, either manually or through software.

Coverage target: A coverage goal is defined over the control flow graph, for example covering all of its nodes, edges, paths, or branches.

Test Case Creation: Control flow graphs are used to construct test cases that cover the given coverage objective.

Test Case Execution: Once test cases covering the coverage target have been created, they are executed.

Analysis: Analyze the results to see if the software is error-free or if there are any flaws.
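The five steps can be sketched in Python as follows. The function `f`, its node numbering, and the choice of edge coverage as the target are assumptions for illustration; the function is instrumented by hand so that each run records the graph nodes it visits.

```python
# Hypothetical function under test, instrumented to record its path:
#   node 1: x = 0 and the decision "if a"
#   node 2: x += 1
#   node 3: the decision "if b"
#   node 4: x += 2
#   node 5: return x
def f(a, b, trace):
    trace.append(1)
    x = 0
    if a:
        trace.append(2)
        x += 1
    trace.append(3)
    if b:
        trace.append(4)
        x += 2
    trace.append(5)
    return x


# Step 1: the control flow graph as a set of (from_node, to_node) edges.
CFG_EDGES = {(1, 2), (1, 3), (2, 3), (3, 4), (3, 5), (4, 5)}


# Step 2: the coverage target is every edge in CFG_EDGES.
def edge_coverage(test_inputs):
    """Steps 4 and 5: execute each test, then report coverage and missed edges."""
    covered = set()
    for a, b in test_inputs:
        trace = []
        f(a, b, trace)
        covered |= set(zip(trace, trace[1:]))  # consecutive nodes form an edge
    percent = 100 * len(covered & CFG_EDGES) / len(CFG_EDGES)
    return percent, sorted(CFG_EDGES - covered)


# Step 3: test cases chosen from the graph.
print(edge_coverage([(True, True)]))                  # about 66.7%, edges (1, 3) and (3, 5) missed
print(edge_coverage([(True, True), (False, False)]))  # (100.0, [])
```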

Data flow testing

Data flow testing is a set of test methodologies that involves choosing paths through a program's control flow to investigate sequences of events involving the status of variables or data objects. It focuses on the points where variables are assigned values (definitions) and the points where those values are used (uses).
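A small Python sketch of the idea, using a hypothetical `shipping_cost` function. The definition-use (def-use) pairs for the variable `cost` are worked out by hand from the code, and the test cases are chosen so that every pair is exercised at least once.

```python
def shipping_cost(weight, express):
    cost = 5             # d1: definition of cost
    if weight > 10:
        cost = 12        # d2: definition of cost
    if express:
        cost = cost * 2  # u1: use of cost, then d3: new definition
    return cost          # u2: use of cost


# Every def-use pair for `cost`, derived by reading the code.
ALL_PAIRS = {("d1", "u1"), ("d1", "u2"), ("d2", "u1"), ("d2", "u2"), ("d3", "u2")}

# Test cases: (weight, express), expected result, def-use pairs the path exercises.
TESTS = [
    ((5, False), 5, {("d1", "u2")}),
    ((5, True), 10, {("d1", "u1"), ("d3", "u2")}),
    ((20, False), 12, {("d2", "u2")}),
    ((20, True), 24, {("d2", "u1"), ("d3", "u2")}),
]


def run_data_flow_tests():
    """Check each result and return the def-use pairs no test exercised."""
    covered = set()
    for args, expected, pairs in TESTS:
        assert shipping_cost(*args) == expected, args
        covered |= pairs
    return ALL_PAIRS - covered


print(run_data_flow_tests())  # set()
```

An empty result means the four test cases achieve all-def-use coverage for `cost`.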

Branch testing

Branch testing is a testing approach whose primary purpose is to ensure that each possible branch from each decision point is tested at least once, so that all reachable code is tested. Each decision is treated as having two outcomes, and both the True and the False outcome must be tested.
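For example, a hypothetical `withdraw` function has one decision point, so branch testing needs at least two test cases: one that makes the condition True and one that makes it False.

```python
def withdraw(balance, amount):
    """Hypothetical function with a single decision point."""
    if amount > balance:  # decision: True or False
        raise ValueError("insufficient funds")
    return balance - amount


def test_true_outcome():
    # amount > balance is True: the withdrawal must be refused.
    try:
        withdraw(50, 80)
    except ValueError:
        return
    raise AssertionError("expected ValueError when amount exceeds balance")


def test_false_outcome():
    # amount > balance is False: the balance is reduced.
    assert withdraw(80, 50) == 30
    assert withdraw(50, 50) == 0  # boundary value also takes the False branch


for test in (test_true_outcome, test_false_outcome):
    test()
print("both branch outcomes tested")
```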

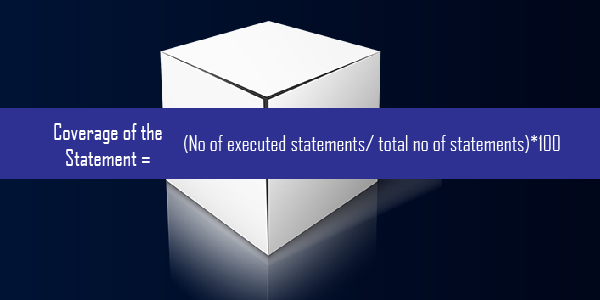

Statement coverage

The statement coverage technique involves executing every statement in the source code at least once. It measures the number of executed statements as a proportion of the total number of statements in the source code.

Because white box testing is based on the structure of the code, statement coverage is used to derive test case scenarios from that structure.
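Written as a formula, with a hypothetical worked example in which the tests execute 8 of 10 statements:

```latex
\text{Statement coverage} = \frac{\text{number of executed statements}}{\text{total number of statements}} \times 100\%

% Hypothetical example: the tests execute 8 of 10 statements
\text{Statement coverage} = \frac{8}{10} \times 100\% = 80\%
```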

Decision coverage

Decision coverage, also known as branch coverage, is a testing technique that ensures that each possible branch from each decision point is tested at least once, so that all reachable code is executed.

In other words, every decision is evaluated to both true and false. This verifies all of the code's branches, ensuring that none of them leads to abnormal application behaviour.
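The difference from statement coverage shows up with an `if` that has no `else`. In this Python sketch, `apply_discount` and its flat discount are hypothetical, and the function is instrumented by hand to record decision outcomes. A single test executes every statement, yet only half of the decision outcomes.

```python
# All decision outcomes that must be exercised: (decision, outcome).
ALL_OUTCOMES = {("is_member", True), ("is_member", False)}
observed = set()  # outcomes recorded while the tests run


def apply_discount(price, is_member):
    """Hypothetical function with one decision and no else branch."""
    observed.add(("is_member", bool(is_member)))  # instrumentation
    if is_member:
        price = price - 10
    return price


def decision_coverage():
    return 100 * len(observed & ALL_OUTCOMES) / len(ALL_OUTCOMES)


# One test executes every statement (100% statement coverage)...
assert apply_discount(100, True) == 90
print(decision_coverage())  # 50.0: the False outcome was never taken

# ...but a second test is needed to take the False outcome as well.
assert apply_discount(100, False) == 100
print(decision_coverage())  # 100.0
```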

Path testing

Path testing is a technique for developing test scenarios. A control flow graph of the program is built and used to identify a set of linearly independent execution paths. The number of linearly independent paths is given by the program's cyclomatic complexity, and a test case is then generated for each path.
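A minimal Python sketch, assuming a hypothetical control flow graph for two sequential `if` statements. It computes the cyclomatic complexity with the standard formula V(G) = E − N + 2P (edges, nodes, connected components) and lists every entry-to-exit path.

```python
# Hypothetical control flow graph for two sequential if statements:
#   A: first decision, B: body of the first if, C: second decision,
#   D: body of the second if, E: exit
CFG = {
    "A": ["B", "C"],
    "B": ["C"],
    "C": ["D", "E"],
    "D": ["E"],
    "E": [],
}


def cyclomatic_complexity(cfg, components=1):
    """V(G) = E - N + 2P for a graph with E edges, N nodes, P connected components."""
    edges = sum(len(successors) for successors in cfg.values())
    nodes = len(cfg)
    return edges - nodes + 2 * components


def all_paths(cfg, node, exit_node):
    """Every entry-to-exit path; only valid for acyclic graphs (no loops)."""
    if node == exit_node:
        return [[node]]
    return [[node] + rest
            for nxt in cfg[node]
            for rest in all_paths(cfg, nxt, exit_node)]


print(cyclomatic_complexity(CFG))  # 3
for path in all_paths(CFG, "A", "E"):
    print(" -> ".join(path))
```

The graph has four complete paths but a cyclomatic complexity of 3. A basis set such as A-C-E, A-B-C-E, and A-C-D-E is enough, because the fourth path (A-B-C-D-E) is a linear combination of those three, so three test cases satisfy path testing here.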

Function coverage

Function coverage measures how many of the functions (or subroutines) in the source code have been called at least once during testing. It requires a list of the functions to be tested, data showing which of those functions were actually invoked, and an analysis of that data.
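A minimal Python sketch of function coverage. The `tracked` decorator and the shopping-cart functions are hypothetical: the decorator builds the list of functions to cover and counts calls, and `function_coverage` analyses the counts after the tests run.

```python
import functools

call_counts = {}  # function name -> number of calls during testing


def tracked(func):
    """Register func in the list of functions to cover and count its calls."""
    call_counts[func.__name__] = 0

    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        call_counts[func.__name__] += 1
        return func(*args, **kwargs)

    return wrapper


@tracked
def add_to_cart(cart, item, price):
    cart.append((item, price))
    return cart


@tracked
def cart_total(cart):
    return sum(price for _, price in cart)


@tracked
def checkout(cart):
    return {"items": len(cart), "total": cart_total(cart)}


def function_coverage():
    """Return (percentage of functions called, names never called)."""
    called = [name for name, count in call_counts.items() if count > 0]
    uncalled = sorted(name for name, count in call_counts.items() if count == 0)
    return 100 * len(called) / len(call_counts), uncalled


# A test suite that never exercises checkout:
cart = add_to_cart([], "book", 12)
assert cart_total(cart) == 12
print(function_coverage())  # checkout is reported as never called
```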

Conclusion

Compared with other types of penetration testing, white box testing is more expensive and highly developer-focused.

White box testing is best suited for applications in the development stage, because its ability to assess the source code in great detail and with precision can help prevent future threats.